Preference Elicitation for Decision Making Assistance in Social Work-Related Interventions

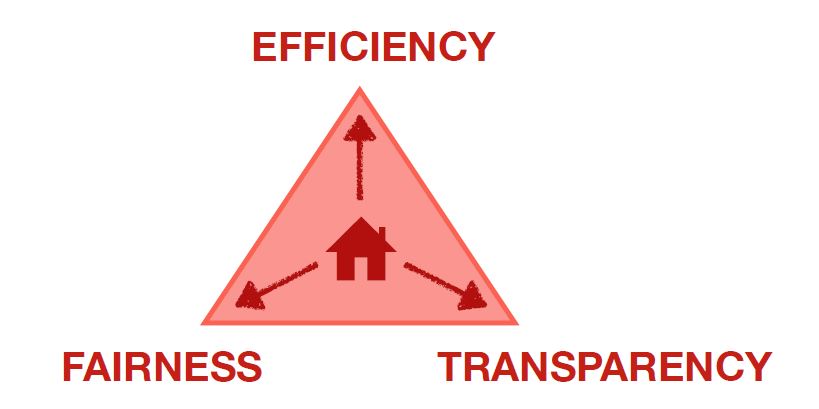

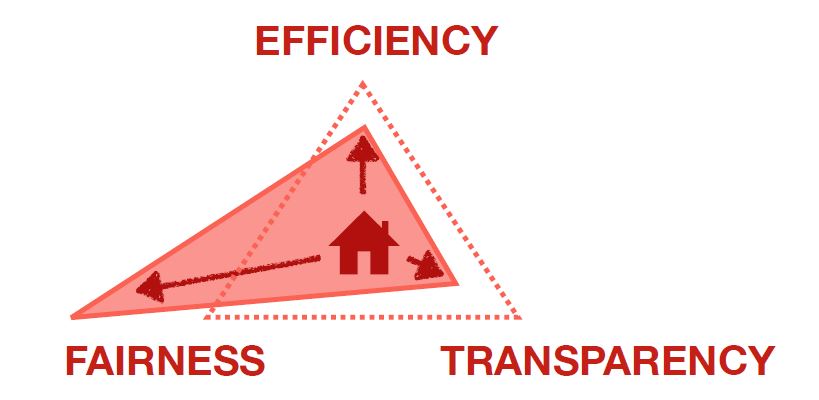

Individuals within a given committee, organization, or community often hold different opinions about which characteristics or variables should be prioritized when deciding who should receive social services. It is therefore important to assess and understand which variables matter most to each individual when making a decision, while also balancing fairness, efficiency, and transparency. These findings are then incorporated into decision-making models and services that benefit the greater community. To explore the wide range of possible priorities while balancing fairness, efficiency, and transparency, we created an algorithm that selects a series of pairwise comparisons to measure an individual’s preferences. This process is called preference elicitation.

Goals

We want to learn the priorities of stakeholders for a given decision-making problem in order to create more fair, accountable, and transparent decisions.

Methods

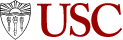

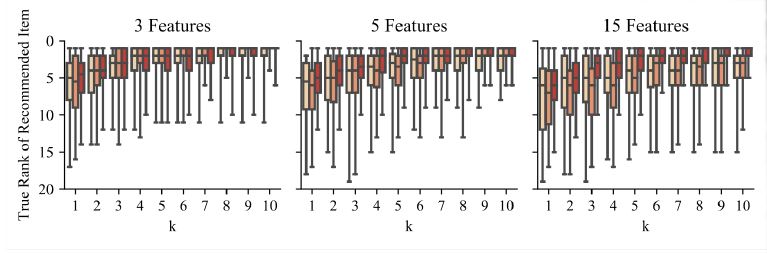

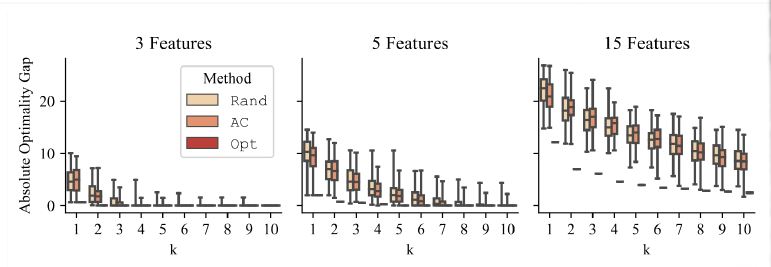

The algorithm we designed to assess stakeholders’ preferences draws on a large pool of candidate questions. Using adaptive preference elicitation, it chooses each subsequent question based on the individual’s response to the current one. Each question is a pairwise comparison between two options whose variables/characteristics differ only slightly. The response options are “I strictly prefer A to B,” “I am indifferent between A and B,” and “I strictly prefer B to A.”
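To make the question format concrete, here is a minimal Python sketch of a single elicitation step. It assumes, purely for illustration, that a stakeholder scores an option with a linear weighted sum of its characteristics and reports indifference when two scores fall within a small tolerance `epsilon`. The names `Response`, `utility`, `predicted_response`, and `update`, the candidate weight vectors, and the two example characteristics are all hypothetical and are not taken from our actual system. Each answer rules out the candidate weightings that would have produced a different response.

```python
from enum import Enum


class Response(Enum):
    # The three answer options offered for every pairwise comparison.
    PREFER_A = "I strictly prefer A to B"
    INDIFFERENT = "I am indifferent between A and B"
    PREFER_B = "I strictly prefer B to A"


def utility(weights, option):
    """Assumed linear score: weighted sum of an option's characteristics."""
    return sum(w * x for w, x in zip(weights, option))


def predicted_response(weights, a, b, epsilon=0.05):
    """Answer a stakeholder with these weights would give; gaps within epsilon count as indifference."""
    diff = utility(weights, a) - utility(weights, b)
    if diff > epsilon:
        return Response.PREFER_A
    if diff < -epsilon:
        return Response.PREFER_B
    return Response.INDIFFERENT


def update(candidates, a, b, observed):
    """Keep only the candidate weight vectors consistent with the observed answer."""
    return [w for w in candidates if predicted_response(w, a, b) == observed]


# Two hypothetical characteristics per person, e.g. (vulnerability score, time on waitlist), scaled to [0, 1].
candidates = [(1.0, 0.0), (0.5, 0.5), (0.0, 1.0)]
a, b = (0.6, 0.4), (0.4, 0.6)  # A and B differ only slightly
remaining = update(candidates, a, b, Response.PREFER_A)
print(remaining)  # → [(1.0, 0.0)]
```

Because A and B differ only slightly, the three candidate weightings disagree about the answer, so a single response is informative: here it leaves only the weighting that prioritizes the first characteristic.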

Example Use Cases

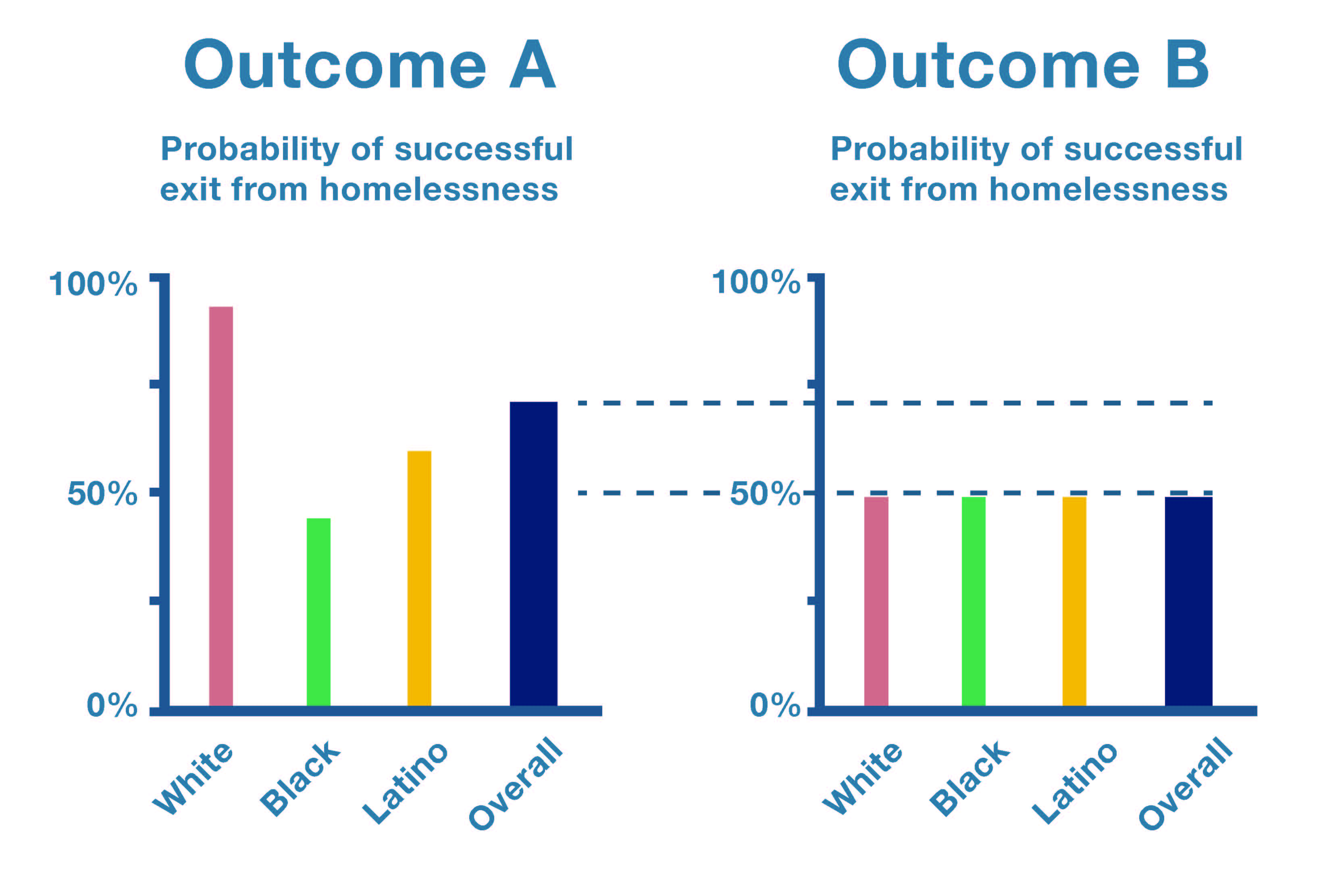

Motivated by the problem of designing policies for allocating scarce housing resources that meet the needs of policymakers at the Los Angeles Homeless Services Authority (LAHSA), we have devised a novel model and algorithm for actively eliciting the preferences of policy- and decision-makers in an optimal fashion when only a limited number of questions can be asked. Specifically, we are investigating which characteristics are most important when weighing fairness against efficiency. We do this by asking stakeholders pairwise comparison questions, for example: which is more important, X or Y? To assess these preferences, our algorithm strategically selects questions from a potentially huge pool in order to narrow down the most vital considerations.
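The sketch below illustrates one way a limited question budget can be spent adaptively. As a stand-in selection rule (not necessarily the criterion our algorithm uses), each round picks the question from the pool whose worst-case answer leaves the fewest candidate weightings, then discards candidates inconsistent with the reply. The linear-utility assumption, the hypothetical functions `answer`, `worst_case_remaining`, and `elicit`, the `ask` callback, the toy profiles of three yes/no characteristics, and the simulated stakeholder are all illustrative.

```python
import itertools


def answer(weights, a, b, eps=0.05):
    """+1 = strictly prefer A, 0 = indifferent, -1 = strictly prefer B (assumed linear utility)."""
    diff = sum(w * (x - y) for w, x, y in zip(weights, a, b))
    if diff > eps:
        return 1
    if diff < -eps:
        return -1
    return 0


def worst_case_remaining(candidates, question):
    """Size of the largest group of candidates that agree on the answer to `question`."""
    counts = {}
    for w in candidates:
        r = answer(w, *question)
        counts[r] = counts.get(r, 0) + 1
    return max(counts.values())


def elicit(candidates, questions, ask, budget):
    """Ask at most `budget` questions, each chosen to minimize the worst-case number of survivors."""
    remaining = list(candidates)
    pool = list(questions)
    asked = []
    for _ in range(budget):
        if len(remaining) <= 1 or not pool:
            break  # preferences pinned down, or nothing left to ask
        q = min(pool, key=lambda pair: worst_case_remaining(remaining, pair))
        pool.remove(q)
        r = ask(*q)  # the stakeholder's actual reply
        asked.append((q, r))
        remaining = [w for w in remaining if answer(w, *q) == r]
    return remaining, asked


# Toy setup: each person is described by three hypothetical yes/no characteristics.
profiles = list(itertools.product([0, 1], repeat=3))
questions = list(itertools.combinations(profiles, 2))
# Candidate stakeholder weightings: every ordering of the priorities 0.5 > 0.3 > 0.2.
candidates = list(itertools.permutations([0.5, 0.3, 0.2]))

true_w = (0.5, 0.2, 0.3)  # simulated stakeholder, unknown to the algorithm
remaining, asked = elicit(candidates, questions, lambda a, b: answer(true_w, a, b), budget=3)
print(f"asked {len(asked)} questions; consistent weightings: {remaining}")
```

With six candidate priority orderings, some question splits them three against three, so the first question already eliminates at least half. Scanning every pair each round costs time proportional to the pool size times the number of candidates, which is fine for this toy example but grows quickly for a large question pool.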

More info on the housing allocation project can be found here.

How much efficiency are we willing to sacrifice for fairness/transparency? We want to learn the stakeholders’ preferences while balancing fairness, equity, and efficiency.
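One way to frame the efficiency-versus-fairness question quantitatively is to give each stakeholder a trade-off weight λ between 0 and 1 and assume, for illustration only, that they value a policy at (1 - λ) · efficiency + λ · fairness. Each answer about two policies then becomes a linear constraint on λ, so a few answers narrow down an interval of plausible weights. The function `update_interval`, the policy scores, and the answers below are hypothetical; ties at the interval endpoints are ignored for simplicity.

```python
from fractions import Fraction


def update_interval(lo, hi, a, b, answer):
    """Narrow the feasible range [lo, hi] of the fairness weight lam.

    a, b: (efficiency, fairness) scores of two hypothetical policies.
    answer: "A" (strictly prefers A), "B" (strictly prefers B), or "=" (indifferent).
    Assumed utility: U(p) = (1 - lam) * efficiency + lam * fairness.
    Returns the new (lo, hi), or None if no weight in range explains the answer.
    """
    de = a[0] - b[0]   # efficiency gap, A minus B
    df = a[1] - b[1]   # fairness gap, A minus B
    slope = df - de    # U(A) - U(B) = de + lam * slope
    if answer == "B":  # flip signs so we always reason about "winner minus loser"
        de, slope = -de, -slope
    if slope == 0:
        # The utility gap equals de for every lam: consistent for all lam or for none.
        ok = (de == 0) if answer == "=" else (de > 0)
        return (lo, hi) if ok else None
    root = Fraction(-de) / slope  # lam at which the two policies tie
    if answer == "=":
        return (root, root) if lo <= root <= hi else None
    if slope > 0:      # the preferred policy wins only when lam > root
        lo = max(lo, root)
    else:              # the preferred policy wins only when lam < root
        hi = min(hi, root)
    return (lo, hi) if lo <= hi else None


interval = (Fraction(0), Fraction(1))
# Q1: A = (efficiency 80, fairness 40) vs B = (60, 70); stakeholder strictly prefers B.
interval = update_interval(*interval, (80, 40), (60, 70), "B")
# Q2: A = (70, 50) vs B = (50, 60); stakeholder strictly prefers A.
interval = update_interval(*interval, (70, 50), (50, 60), "A")
print(interval)  # → (Fraction(2, 5), Fraction(2, 3))
```

Here the answers place this stakeholder's fairness weight between 2/5 and 2/3: they accept some loss of efficiency in exchange for fairness, but not an unlimited amount. A return value of `None` would signal answers that no single weight can explain.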

We’re using AI to determine which questions to ask. Our algorithm strategically selects pairwise comparison questions from a large bank of relevant questions in order to identify what stakeholders consider most important.