Land Use/Land Cover Mapping

Satellite imagery of the world is collected daily and can provide many useful insights into what is happening on the ground. In fact, the United Nations has estimated that “approximately 20% of the SDG [Sustainable Development Goals] indicators can be interpreted and measured either through direct use of geospatial data itself or through integration with statistical data” [1]. To derive insights from satellite imagery, we must first process it into a data product that is meaningful for the problems we want to solve. For example, land use/land cover (LULC) data labels satellite imagery with categories such as water, tree canopy, barren land, and built-up surfaces. This data is useful in downstream sustainability applications, such as planning riparian buffer repair projects [2]. However, it is also extremely costly to create manually. This is where machine learning can play a vital role. We aim to advance machine learning techniques that derive useful insights from satellite imagery at scale, with the goal of tackling pressing problems in computational sustainability.

Goals

We aim to develop machine learning models and methodology that process satellite imagery into useful data and insights that support downstream decision making. These models should be developed together with decision makers in order to provide the best possible data. This development process exposes machine learning challenges such as domain adaptation, active learning, and model ensembling.

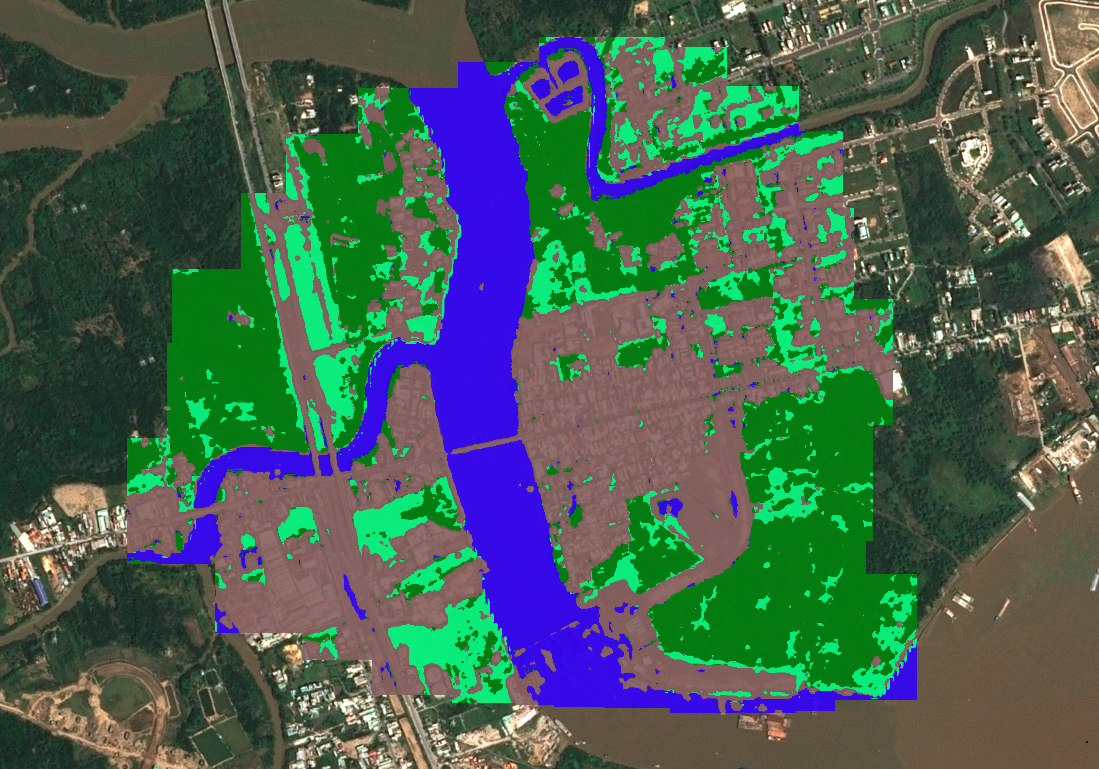

Close-up view of part of the classification from the web-based classification tool.

Methods

Land cover mapping

We treat land cover mapping as an image segmentation problem and train convolutional neural networks to segment high-resolution satellite and aerial imagery into different land cover categories. While this approach provides good results near areas where we have existing high-resolution labeled data, model performance degrades in new areas because the same land cover class may look different in different locations. To overcome this problem, we develop new methods that train land cover models with more plentiful low-resolution labels and that incorporate inputs from different resolutions and points in time.

We find that these methods improve the spatial generalization of our models. We subsequently train and run models over the continental United States, producing the first 1 m resolution land cover map covering over 8 trillion pixels of imagery.
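The segmentation framing above can be illustrated with a minimal sketch in plain Python. Each pixel is assigned the category with the highest score, and the per-class fractions over a block give a summary that could, in principle, be compared against a coarser label. The scores are made up, the class list is taken from the categories named in the introduction, and the block-fraction summary is an illustrative assumption rather than a description of how our models use low-resolution labels.

```python
# Class names follow the categories listed in the introduction.
CLASSES = ["water", "tree canopy", "barren land", "built-up surface"]


def segment(scores):
    """Turn an H x W grid of per-class scores into an H x W grid of class indices.

    Each pixel is a list with one score per class; the pixel is assigned the
    index of its highest score (the first one on ties).
    """
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]


def class_fractions(label_map, n_classes):
    """Fraction of pixels assigned to each class within one block of labels."""
    counts = [0] * n_classes
    for row in label_map:
        for class_index in row:
            counts[class_index] += 1
    total = sum(counts)
    return [count / total for count in counts]


# Hypothetical 2 x 2 patch of model scores (four class scores per pixel).
scores = [
    [[0.7, 0.1, 0.1, 0.1], [0.6, 0.2, 0.1, 0.1]],
    [[0.1, 0.8, 0.05, 0.05], [0.1, 0.1, 0.1, 0.7]],
]

label_map = segment(scores)
fractions = class_fractions(label_map, len(CLASSES))

for name, fraction in zip(CLASSES, fractions):
    print(f"{name}: {fraction:.2f}")
```

In a real pipeline the scores would come from a trained convolutional network rather than a hand-written list; the sketch only shows the per-pixel labeling step and one way to summarize it at a coarser scale.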

See our code at: https://github.com/calebrob6/land-cover

See our dataset at: http://lila.science/datasets/chesapeakelandcover

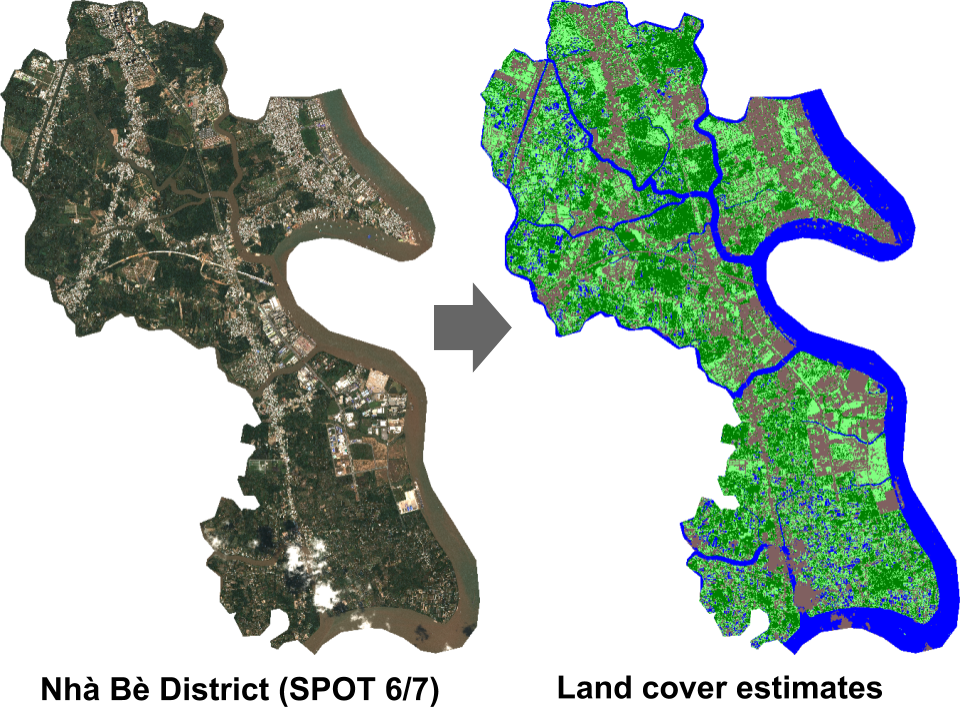

Land cover classification of 1.5 m resolution imagery covering the Nhà Bè district in Ho Chi Minh City, Vietnam.

Human population density estimation

To jointly answer the questions of “where do people live?” and “how many people live there?” we propose a deep learning model for creating high-resolution population estimates from satellite imagery. Specifically, we train convolutional neural networks to predict population in the United States on a 0.01° × 0.01° grid from 1-year composite Landsat imagery.
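To give a sense of what a 0.01° × 0.01° grid means in practice, the sketch below maps a coordinate to its grid cell and approximates the cell's size on the ground. The origin convention (rows counted north from latitude -90, columns counted east from longitude -180), the function names, and the example coordinate are assumptions made for illustration; 111.32 km is the usual approximation for one degree of latitude.

```python
import math

CELL_DEG = 0.01  # grid resolution from the text, in degrees


def cell_index(lat, lon, cell_deg=CELL_DEG):
    """Return the (row, col) of the grid cell that contains a point.

    Assumed convention: rows count north from latitude -90 and columns
    count east from longitude -180.
    """
    row = math.floor((lat + 90.0) / cell_deg)
    col = math.floor((lon + 180.0) / cell_deg)
    return row, col


def cell_size_km(lat, cell_deg=CELL_DEG):
    """Approximate (north-south, east-west) extent of a cell in kilometres."""
    km_per_degree = 111.32  # approximate length of one degree of latitude
    north_south = cell_deg * km_per_degree
    # East-west extent shrinks with the cosine of the latitude.
    east_west = north_south * math.cos(math.radians(lat))
    return north_south, east_west


# Hypothetical example point in the southeastern United States.
row, col = cell_index(33.7756, -84.3963)
ns_km, ew_km = cell_size_km(33.7756)
print(row, col, round(ns_km, 2), round(ew_km, 2))
```

Under these assumptions a cell is roughly 1.1 km from north to south everywhere, and somewhat narrower from east to west at higher latitudes.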

We find that aggregating our model’s estimates gives results comparable to the Census county-level population projections and that the predictions made by our model can be directly interpreted, which gives it advantages over traditional population disaggregation methods.
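The aggregation step mentioned above can be sketched as follows: per-cell estimates are summed into county totals and compared with reference totals. The county identifiers, cell values, and reference numbers are invented for illustration, and the relative-error measure is one possible comparison chosen here, not necessarily the one used in our evaluation.

```python
from collections import defaultdict


def aggregate_by_county(cell_estimates):
    """Sum per-cell population estimates into county totals.

    cell_estimates: iterable of (county_id, estimated_population) pairs.
    """
    totals = defaultdict(float)
    for county_id, population in cell_estimates:
        totals[county_id] += population
    return dict(totals)


def relative_error(predicted, reference):
    """Signed relative error per county, for counties present in both inputs."""
    return {
        county_id: (predicted[county_id] - reference[county_id]) / reference[county_id]
        for county_id in reference
        if county_id in predicted
    }


# Made-up per-cell estimates and made-up reference county totals.
cells = [("A", 120.0), ("A", 80.0), ("B", 50.0), ("B", 25.0), ("B", 25.0)]
reference = {"A": 250.0, "B": 100.0}

totals = aggregate_by_county(cells)
errors = relative_error(totals, reference)

for county_id in sorted(totals):
    print(county_id, totals[county_id], f"{errors[county_id]:+.1%}")
```

Because the county total is a plain sum of cell-level predictions, each cell's contribution to the total can be read off directly.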

In general, our model is an example of how machine learning techniques can be an effective tool for extracting information from inherently unstructured, remotely sensed data to provide effective solutions to social problems.

See our slides at: https://deeppop.github.io/resources/robinson2017-deeppop-slides.pdf

See our code at: https://github.com/deeppop

Bistra Dilkina

Caleb Robinson

Microsoft AI for Earth

1. UN Global Geospatial Information Management. Global and Complementary (Non-authoritative) Geospatial Data for SDGs: Role and Utilisation. http://ggim.un.org/documents/Report_Global_and_Complementary_Geospatial_Data_for_SDGs.pdf. Accessed 2019.

2. Chesapeake Conservancy. Enhanced Flow Path Data. https://chesapeakeconservancy.org/conservation-innovation-center/high-resolution-data/enhanced-flow-paths/. Accessed 2019.